We ought to be doing everything we can to foster curiosity, but we undervalue and misunderstand it.

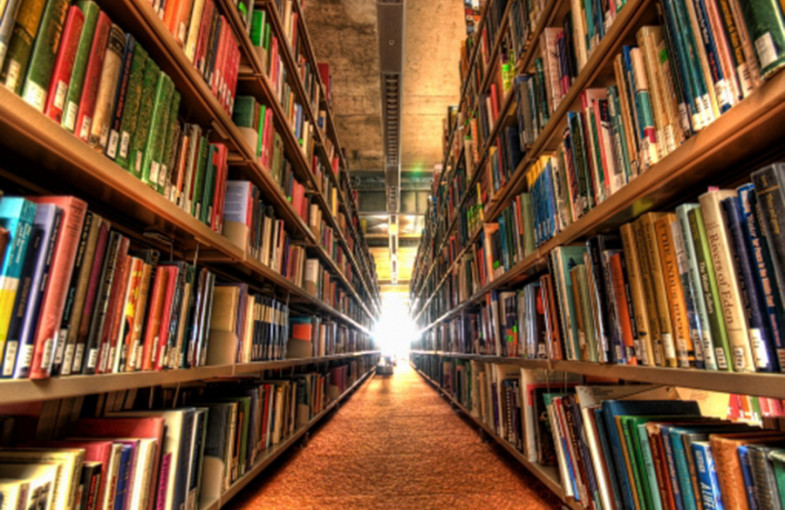

Before the printing press, let alone the internet, knowledge was the preserve of the 1 per cent. Books were the super yachts of 17th-century kings. The advent of the printing press and, latterly, the World Wide Web has broken this monopoly. Today, in a world where vast inequalities in access to information are finally being levelled, a new cognitive divide is emerging: between the curious and the incurious.

Twenty-first-century economies are rewarding those who have an unquenchable desire to discover, learn and accumulate a wide range of knowledge. It's no longer just about who or what you know, but how much you want to know. The curious are more likely to stay in education for longer. A hungry mind isn't the only trait you need to do well at school, but according to Sophie von Stumm of Goldsmiths, University of London, it ranks alongside the other two quantifiably important traits, intelligence and conscientiousness, as one of the best predictors of achievement.

Across the developed world, the cost of university is rising, but the cost of not going to university is rising faster. In the UK, according to a report commissioned by the Department for Business, Innovation and Skills, workers with degrees earn 27 per cent more than those with only A-levels.

Once students are at university, individual differences in cognitive ability flatten out (you had to be reasonably smart to get there), and an inner drive to learn becomes even more important. Curiosity can make the difference between classes of degree, and that matters: workers with Firsts or Upper Seconds earn on average £80,000 more over a lifetime than those with 2:2s or lower.

Students entering the workplace need to stay curious, because the wages for routine intellectual work, even in professional industries such as accountancy and law, are falling. Technology is rapidly taking over tasks historically performed by human beings, and it’s no longer enough to be merely competent or smart: computers are both. But no computer can yet be said to be curious. As the technology writer Kevin Kelly puts it, “Machines are for answers; humans are for questions.”

Industries are growing more complex and unpredictable, and employers are increasingly looking for curious learners: people with an aptitude for cognitively demanding work and a thirst for knowledge. This applies even in areas not previously thought to be intellectually challenging. Curious people are often good at solving problems for their employers, because they're really solving them for themselves. When confident that others are working on the same problem, most people cut themselves some slack. Highly curious people are the exception to this rule.

Curiosity may be a fundamental human trait, but intellectual curiosity is hard work. It requires a willingness to learn things that can seem pointless at the time but turn out to be useful later, and to perform boring tasks such as writing out equations over and over again. Developing that willingness depends on the guidance of adults and experts.

The web is just as likely to neuter curiosity as to supercharge it. It presents us with more opportunities than ever before to learn, and also to watch endless videos of kittens. Those who acquire the habits of intellectual curiosity early on will use computers to learn throughout their lives; those who don't may find themselves replaced by one, having had their curiosity killed by cats.